What Happened To Programming In The 2010s?

A while ago, I read an article titled "What Happened In The 2010s" by Fred Wilson. The post highlights key changes in technology and business during the last ten years. This inspired me to think about a much narrower topic: What Happened To Programming In The 2010s?

🚓 I probably forgot like 90% of what actually happened. Please don't sue me. My goal is to reflect on the past so that you can better predict the future.

Where To Start?

From a mile-high perspective, programming is still the same as a decade ago:

- Punch program into editor

- Feed to compiler (or interpreter)

- Bleep Boop 🤖

- Receive output

But if we take a closer look, a lot has changed around us. Many things we take for granted today didn't exist a decade ago.

What Happened Before?

Back in 2009, we wrote jQuery plugins, ran websites on shared hosting services, and uploaded content via FTP. Sometimes code was copy-pasted from dubious forums, tutorials on blogs, or even hand-transcribed from books. Stack Overflow (which launched on 15 September 2008) was still in its infancy. Version control was done with CVS or SVN, or not at all. I signed up for GitHub on 3 January 2010. Nobody had even heard of a Raspberry Pi (which wasn't released until 2012).

An Explosion Of New Programming Languages

The last decade saw the creation of a vast number of new and exciting programming languages.

Crystal, Dart, Elixir, Elm, Go, Julia, Kotlin, Nim, Rust, Swift, and TypeScript were all either created or reached their first stable release during the decade!

Even more exciting: all of the above languages are now developed in the open, and their source code is freely available on GitHub. That means everyone can contribute to their development, which is a big testament to Open Source.

Each of those languages introduced new ideas that were not widespread before:

- Strong Type Systems: Kotlin and Swift made optional (nullable) types mainstream, TypeScript brought types to JavaScript, and algebraic data types are common in Kotlin, Swift, TypeScript, and Rust.

- Interoperability: Dart compiles to JavaScript, Elixir interfaces with Erlang, Kotlin with Java, and Swift with Objective-C.

- Better Performance: Go promoted goroutines and channels for easier concurrency and impressed with sub-millisecond garbage collection pauses, while Rust avoids garbage collection overhead altogether thanks to ownership and borrowing.
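To make two of these ideas a bit more concrete, here is a small Rust sketch (Rust being one of the languages from the list). The `Shape` type and the `area` and `total_area` functions are made up purely for illustration. It shows an algebraic data type with exhaustive pattern matching, plus a function that borrows its input instead of taking ownership, so no garbage collector is needed to know when the data can be freed.

```rust
// An algebraic data type: a `Shape` is exactly one of these variants,
// and each variant carries its own data.
enum Shape {
    Circle { radius: f64 },
    Rectangle { width: f64, height: f64 },
}

// `match` must cover every variant; forgetting one is a compile error.
fn area(shape: &Shape) -> f64 {
    match shape {
        Shape::Circle { radius } => std::f64::consts::PI * radius * radius,
        Shape::Rectangle { width, height } => width * height,
    }
}

// Borrowing: the slice is lent to this function as an immutable reference,
// so the caller keeps ownership of the shapes.
fn total_area(shapes: &[Shape]) -> f64 {
    shapes.iter().map(area).sum()
}

fn main() {
    let shapes = vec![
        Shape::Circle { radius: 1.0 },
        Shape::Rectangle { width: 2.0, height: 3.0 },
    ];
    println!("total area: {:.2}", total_area(&shapes)); // prints "total area: 9.14"

    // `shapes` is still usable here because it was only borrowed.
    println!("{} shapes", shapes.len());
    // At the end of `main`, the owner goes out of scope and the memory is
    // freed deterministically.
}
```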

This is just a short list, but innovation in the programming language field has greatly accelerated.

More Innovation in Older Languages

Established languages didn't stand still either. A few examples:

C++ woke up from its long winter sleep and released C++11 after its last major release in 1998. It introduced numerous new features to the language, like lambdas, auto type deduction, smart pointers, and range-based for loops.

At the beginning of the last decade, the latest PHP version was 5.3. We're at 7.4 now. (We skipped 6.0, but I'm not ready to talk about it yet.) Along the way, it got over twice as fast. PHP is a truly modern programming language now with a thriving ecosystem.

Heck, even Visual Basic has tuples now. (Sorry, I couldn't resist.)

Faster Release Cycles

Most languages adopted a quicker release cycle. Here's a list for some popular languages:

| Language | Current release cycle |
|---|---|
| C | irregular |
| C# | ~ 12 months |
| C++ | ~ 3 years |
| Go | 6 months |
| Java | 6 months |
| JavaScript (ECMAScript) | 12 months |
| PHP | 12 months |
| Python | 12 months |
| Ruby | 12 months |
| Rust | 6 weeks (!) |
| Swift | 6 months |
| Visual Basic .NET | ~ 24 months |

The Slow Death Of Null

Just before the decade began, in a talk from 25 August 2009, Tony Hoare called the null reference his "Billion Dollar Mistake".

A study by the Chromium project found that 70% of their serious security bugs were memory safety problems, and Microsoft reported the same figure. Fortunately, the notion that memory safety problems aren't simply caused by bad coders has finally gained some traction.

Many mainstream languages embraced safer alternatives to null: nullable types, Option, and Result types. Languages like Haskell had these features before, but they only gained popularity in the 2010s.
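Here is roughly what that looks like in Rust, as a minimal sketch. The configuration map and the `lookup` and `parse_port` helpers are hypothetical; only `Option`, `Result`, and the standard-library calls are real. The point is that "no value" and "failed" are part of the function signatures, so the compiler makes the caller deal with them instead of crashing on a null later.

```rust
use std::collections::HashMap;
use std::num::ParseIntError;

// Instead of returning null, the lookup returns `Option<&str>`.
// The caller cannot use the value without first handling `None`.
fn lookup<'a>(config: &HashMap<&str, &'a str>, key: &str) -> Option<&'a str> {
    config.get(key).copied()
}

// `Result<T, E>` makes failure explicit in the signature.
fn parse_port(raw: &str) -> Result<u16, ParseIntError> {
    raw.trim().parse::<u16>()
}

fn main() {
    let mut config = HashMap::new();
    config.insert("port", "8080");

    match lookup(&config, "port") {
        Some(raw) => match parse_port(raw) {
            Ok(port) => println!("listening on port {port}"),
            Err(e) => println!("invalid port: {e}"),
        },
        None => println!("no port configured"),
    }

    // A missing key is an ordinary value with a sensible fallback.
    let host = lookup(&config, "host").unwrap_or("localhost");
    println!("host: {host}");
}
```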

Revenge of the Type System

Closely related is the debate about type systems. The past decade has seen type systems stage a comeback: TypeScript brought static types to JavaScript, and Python and PHP (to name just two) started to embrace type annotations.

The trend is towards type inference: add types where they make your intent clearer to other humans or resolve ambiguity, and skip them otherwise. Java, C++, Go, Kotlin, Swift, and Rust are popular examples with type inference support. I can only speak for myself, but I think writing Java has become a lot more ergonomic in the last few years.
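A small Rust sketch of that balance. Rust requires types at function boundaries and infers them inside function bodies; the `word_counts` function is just a made-up example. Note the one annotation inside the body: `collect` can build many kinds of collections, so that is exactly the spot where stating the type resolves ambiguity.

```rust
use std::collections::HashMap;

// The signature is always annotated: it's the contract other humans read.
fn word_counts<'a>(words: &[&'a str]) -> Vec<(&'a str, usize)> {
    // No annotation here: the key and value types of `counts` are worked out
    // from how it is used (the value type comes from the `Vec` annotation below).
    let mut counts = HashMap::new();
    for &word in words {
        *counts.entry(word).or_insert(0) += 1;
    }

    // `collect` could build many collection types, so we say which one.
    let mut sorted: Vec<(&str, usize)> = counts.into_iter().collect();
    // Highest count first; ties broken alphabetically for a stable order.
    sorted.sort_by(|a, b| b.1.cmp(&a.1).then(a.0.cmp(b.0)));
    sorted
}

fn main() {
    // Inferred as an array of `&str`.
    let words = ["rust", "go", "rust", "swift", "go", "rust"];
    println!("{:?}", word_counts(&words));
    // prints [("rust", 3), ("go", 2), ("swift", 1)]
}
```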

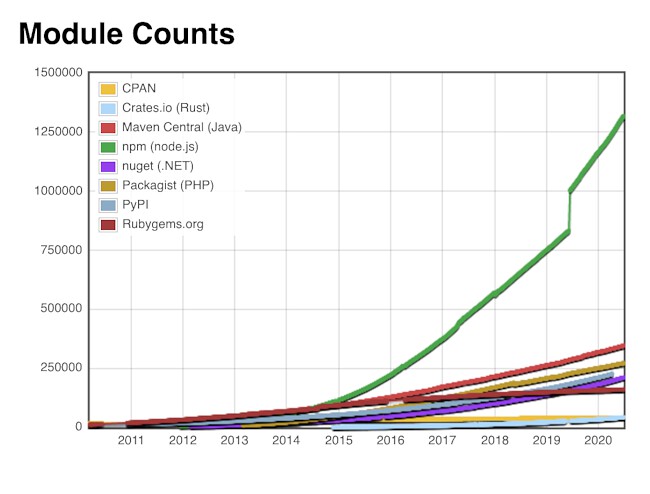

Exponential Growth Of Libraries and Frameworks

As of today, npm hosts 1,330,634 packages. That's over a million packages that somebody else is maintaining for you. Add another 160,488 Ruby gems, 243,984 Python projects, and top it off with 42,547 Rust crates.

Don't ask me what happened to npm in 2019.

Source: Module Counts

Of course, there's the occasional left-pad incident, but the sheer number of packages also means that we have to write less library code ourselves and can focus on business value instead. On the other hand, there are more potential points of failure, auditing is difficult, and many packages are outdated. For a more in-depth discussion, I recommend the Census II report by the Linux Foundation & Harvard [PDF].

We also went a bit crazy on frontend frameworks:

No Free Lunch

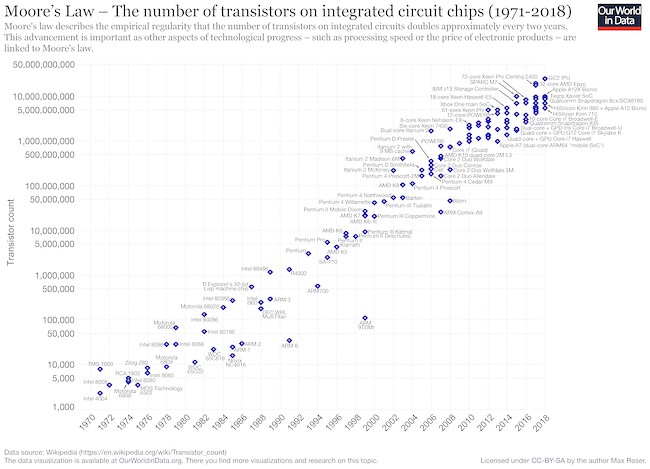

A review like this wouldn't be complete without taking a peek at Moore's Law. It has held up surprisingly well in the last decade:

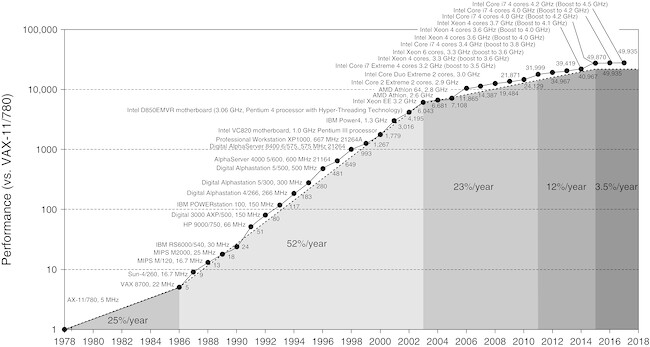

There's a catch, though. Looking at single-core performance, the curve is flattening.

The new transistors prophesied by Moore don’t make our CPUs faster but instead add other kinds of processing capabilities like more parallelism or hardware encryption. There is no free lunch anymore. Engineers have to find new ways of making their applications faster, e.g. by embracing concurrent execution.
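As a rough sketch of what "embracing concurrent execution" can look like with nothing but Rust's standard library (assuming Rust 1.63 or newer for scoped threads): the work is split across threads that report their partial results over a channel, similar in spirit to Go's goroutines and channels. `parallel_sum` is a made-up example, and for a job this small the threading overhead would likely outweigh any speed-up.

```rust
use std::sync::mpsc;
use std::thread;

// Split `data` into roughly `workers` chunks, sum each chunk on its own
// thread, and collect the partial sums through a channel.
fn parallel_sum(data: &[u64], workers: usize) -> u64 {
    let workers = workers.max(1);
    // Round up so every element lands in some chunk; `chunks` needs size > 0.
    let chunk_size = ((data.len() + workers - 1) / workers).max(1);
    let (tx, rx) = mpsc::channel();

    // Scoped threads may borrow `data` because they are guaranteed to be
    // joined before `thread::scope` returns.
    thread::scope(|s| {
        for chunk in data.chunks(chunk_size) {
            let tx = tx.clone();
            s.spawn(move || {
                let partial: u64 = chunk.iter().sum();
                tx.send(partial).expect("receiver is still alive");
            });
        }
    });

    // Drop the last sender so the receiving iterator stops after the
    // buffered partial sums have been read.
    drop(tx);
    rx.iter().sum()
}

fn main() {
    let data: Vec<u64> = (1..=1_000).collect();
    println!("{}", parallel_sum(&data, 4)); // prints 500500
}
```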

Callbacks, coroutines, and eventually async/await are becoming industry standards.
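To give an idea of the async/await style, here is a minimal Rust sketch, assuming Rust 1.68 or newer (for `std::pin::pin!`). Rust's standard library defines `Future` and the `async`/`.await` syntax but ships no executor, so the sketch includes a tiny `block_on`. This is a common toy pattern, not a production runtime like Tokio. `fetch_price` and `basket_total` are hypothetical stand-ins for real I/O.

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

// A waker that unparks the thread blocked inside `block_on`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

// Toy executor: poll the future until it is ready, parking the thread
// whenever it reports `Pending`.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker: Waker = Arc::new(ThreadWaker(thread::current())).into();
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output,
            Poll::Pending => thread::park(),
        }
    }
}

// Hypothetical "network call"; a real one would await a socket or timer.
async fn fetch_price(item: &str) -> u32 {
    item.len() as u32 * 10
}

// Reads like sequential code, but compiles to a state machine that can
// pause at each `.await` instead of tying up a thread while it waits.
async fn basket_total() -> u32 {
    let apple = fetch_price("apple").await;
    let kiwi = fetch_price("kiwi").await;
    apple + kiwi
}

fn main() {
    println!("{}", block_on(basket_total())); // prints 90
}
```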

GPUs (Graphics Processing Units) became very powerful and enabled massively parallel computations, which caused a renaissance of machine learning for practical use cases:

Deep learning becomes feasible, which leads to machine learning becoming integral to many widely used software services and applications. — Timeline of Machine Learning on Wikipedia

Compute is ubiquitous, so in most cases, energy efficiency plays a more prominent role now than raw performance (at least for consumer devices).

Unlikely Twists Of Fate

- Microsoft is a cool kid now. It acquired GitHub, announced the Windows Subsystem for Linux (which should really be called Linux Subsystem for Windows), and open-sourced MS-DOS and .NET. Even the Microsoft Calculator is now open source.

- IBM acquired Red Hat.

- Linus Torvalds apologized for his behavior and took time off.

- Open source became the default for software development (?).

Learnings

If you're now thinking, "Matthias, you totally forgot X," then I've made my point. This is not even close to everything that happened. You'd roughly need a decade to talk about all of it.

Personally, I'm excited about the next ten years. Software is eating the world — at an ever-faster pace.

Thanks for reading! I mostly write about Rust and my (open-source) projects. If you would like to receive future posts automatically, you can subscribe via RSS or email:


Thanks to Jorge-Luis Betancourt and Simon Brüggen for reviewing drafts of this article.